Recently, one of the companies I work for switched across to Dovecot as the email daemon of choice, opening up a host of possibilities for new advancements. Compression is one such advancement, since Dovecot supports it transparently. But you can’t just enable something that will change hundreds of millions of files without asking some questions first. It can all be summarised as Speed, Speed, and Speed. Read on…

Background to the testing

As with all testing, it begins at the individual mailbox level. My initial tests with mailbox compression consisted of first tar’ing my own mailbox, then gzipping that, and then gzipping the individual files in the Maildir. Next I tried my archive mailbox, where lots of stuff gets dumped to keep it out of my primary mailbox. Immediately a problem came up: the compression ratios of the two mailboxes varied quite a bit, one at 10%, the other at 30%. Obviously I needed a bigger corpus to test upon.

Given that these email systems I had access to in my new job are a slight bit bigger than what I had access to previously, I decided to take one of the backup snapshots and work off it. And so went the weekend.

Testing Size and Methodology

This data set is a subset of a subset of a subset. It was originally meant to be a single disk from one server, but due to some unforeseen complications (read: a stupid scripting error), it covers about 60-70% of that set. And to fend off the inevitable questions, a single disk is just a crude segmentation system in use to partition email. The reality is that the disk is from a NetApp filer, so multiple spindles are in place.

The data set covers a few hundred active accounts, some POP, some IMAP, some webmail. No attempt was made to count which was which, nor should it really make a difference to the overall conclusion. (Hint: compression is good)

Overall, a total of 2,625,147 emails were processed, with a combined size of 399,502,115,126 bytes (approx. 372 gigabytes). (As an aside, this gives an average email size of 148 kilobytes, which is probably above average. It may be skewed by some of the large emails. There is a further look at this below.)
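
The two headline figures can be cross-checked with a few lines of Python. This is only a sanity check on the numbers quoted above, assuming the "gigabytes" and "kilobytes" are binary units (1024-based), which is what makes the quoted values line up.

```python
# Figures quoted in the text above.
total_emails = 2_625_147
total_bytes = 399_502_115_126

# Assumption: the post's "Gigabytes" and "Kilobytes" are 1024-based units.
KIB = 1024
GIB = 1024 ** 3

total_gib = total_bytes / GIB
avg_kib = total_bytes / total_emails / KIB

print(f"total size: {total_gib:.1f} GiB")
print(f"average email: {avg_kib:.1f} KiB")
```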

My testing method was to scan the backup snapshot for all emails using the following logic.

    foreach email
        copy to ramdisk
        measure size
        gzip and bzip2 at compression levels 1/5/9
        measure size of compressed files
        measure time to read back the compressed files
        cleanup and save results
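
The loop above can be sketched in Python roughly as follows. This is not the script used for the tests: it works on bytes in memory rather than files on a ramdisk, it uses Python's `gzip` and `bz2` modules instead of the command-line tools, and the function name `measure` and the sample message are made up for illustration.

```python
import bz2
import gzip
import time

LEVELS = (1, 5, 9)  # the three compression levels used in the tests


def measure(data: bytes) -> list[dict]:
    """Compress one email with gzip and bzip2 and time the read-back."""
    results = []
    for name, codec in (("gzip", gzip), ("bzip2", bz2)):
        for level in LEVELS:
            packed = codec.compress(data, level)

            # Time only the read-back (decompression), as in the tests.
            start = time.perf_counter()
            unpacked = codec.decompress(packed)
            elapsed = time.perf_counter() - start

            if unpacked != data:
                raise ValueError(f"{name} level {level} did not round-trip")

            results.append({
                "codec": name,
                "level": level,
                "original_size": len(data),
                "compressed_size": len(packed),
                "read_seconds": elapsed,
            })
    return results


# Hypothetical sample message; a real run would read each file in the Maildir.
sample = b"Subject: test\r\n\r\n" + b"Hello, this is a repetitive body.\r\n" * 200

for row in measure(sample):
    saved = 1 - row["compressed_size"] / row["original_size"]
    print(row["codec"], row["level"], f"{saved:.0%} saved")
```

Timings of a single small message are noisy, so a real run would aggregate over many mails as the tests did.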

Given that the files were all being worked on in the ramdisk, disk latency can be ruled out. This was especially important given the source of the files was a file system snapshot. The machine in question also maintained a load under 1 the whole time. It is a multi-cpu system as well, so no cpu wait timing should have occurred.

Goals

I went into this with a few goals set. My main one was to figure out if compression would actually be a good idea. At 10%, the savings wouldn’t be huge compared to the overhead that compressing stuff would bring, especially given the time to set up and maintain. At 30%, it is a different matter. I wanted to know what the actual average would be over a larger set, since obviously my mailboxes were much too different.

Next goal was to produce some graphs. I kid you not. It has been a while since I’ve created something fun, but graphing compression ratios seems like a nice way to do it. It’s no MapMap, but it’s a start!

Next in line was figuring out the overheads. I knew that bzip2 has a larger overhead than gzip but produces much higher compression ratios. Perhaps there would be a point where using bzip2 would make sense over gzip. Perhaps there was even a point where emails would be too small to justify any compression at all. Who knows, but the averages from this are ideally suited to a graph.

Results

Speed 1 – Compression speed

While I did measure the overhead while compressing the files, the original logic was that compression would happen solely as an offline task on cpu-free hosts. As such a 2000% overhead wouldn’t matter much provided the host could keep up with the daily volume. Dovecot does support realtime compression via both imap and the LDA, so perhaps this is something I’ll come back to.

What I did set out to measure was the impact running the script would have on live or active mailboxes.

The script works roughly as follows

    find all mails in $PATH without Z flag and with the S= set
        if file is already compressed
            add the Z flag
        else
            run the compression
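
The filename checks in that logic could look something like the sketch below. It assumes the usual Maildir layout where flags follow `:2,` at the end of the name and the size is recorded as `,S=<size>`; the helper names and the example filename are hypothetical, and the already-compressed check simply looks for the gzip or bzip2 magic bytes at the start of the file.

```python
import re

GZIP_MAGIC = b"\x1f\x8b"
BZIP2_MAGIC = b"BZh"


def flags_of(filename: str) -> str:
    """Return the flag letters after ':2,' (empty if there are none)."""
    _, sep, flags = filename.rpartition(":2,")
    return flags if sep else ""


def is_candidate(filename: str) -> bool:
    """True for names that carry ,S=<size> and do not yet have the Z flag."""
    if not re.search(r",S=\d+", filename):
        return False
    return "Z" not in flags_of(filename)


def add_z_flag(filename: str) -> str:
    """Add the Z flag, keeping the flags sorted by ASCII value."""
    base, sep, flags = filename.rpartition(":2,")
    if not sep:
        # No flag section yet, so the whole name is the base.
        base, flags = filename, ""
    if "Z" in flags:
        return filename
    return f"{base}:2,{''.join(sorted(flags + 'Z'))}"


def looks_compressed(head: bytes) -> bool:
    """Check the first bytes of a file for a gzip or bzip2 signature."""
    return head.startswith(GZIP_MAGIC) or head.startswith(BZIP2_MAGIC)


# Hypothetical filename for illustration only.
name = "1300000000.M1P1.mail01,S=3271:2,S"
print(is_candidate(name), add_z_flag(name))
```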

Two things to note. First, running the find so that it ignores mails with the Z flag greatly speeds things up. Second, Dovecot will drop the Z flag when moving a compressed email between folders, which is why it is necessary to check whether the file is already compressed before operating on it. The find itself is also quicker than expected given a hot FS cache.

When compressing, I chose to run maildirlock only around the moving of compressed emails. This means the mailbox isn’t locked for extended periods. It is not uncommon to hit a folder with tens of thousands of mails (or a few large mails), which drastically increases the time taken to process a folder. The benefit of this approach is that users won’t notice a compression cycle running against their folder.

To demonstrate a worst case scenario, I took a folder with 65000 emails. This was loaded up in webmail (Roundcube) and I started selecting random emails. A compression cycle was then started across the folder. Lastly, I started a script which connected in over IMAP and started moving emails out of the folder. Surprisingly, nothing lagged by any measurable amount.
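
The idea of doing the slow work outside the lock and only holding it for the renames can be sketched like this. It is an illustration under assumptions rather than the actual script: `lock` is any context manager standing in for a wrapper around maildirlock, the function names are made up, and copying the original timestamps onto the compressed file is my own precaution, not something described above.

```python
import contextlib
import gzip
import os
from pathlib import Path


def compress_to_tmp(mail: Path, tmp_dir: Path, level: int = 6) -> Path:
    """Slow part, done without any lock: write a gzip copy into tmp_dir."""
    target = tmp_dir / mail.name
    target.write_bytes(gzip.compress(mail.read_bytes(), level))
    # Assumption: keep the original timestamps on the compressed copy.
    stat = mail.stat()
    os.utime(target, (stat.st_atime, stat.st_mtime))
    return target


def swap_in(pairs, lock) -> None:
    """Fast part: hold the lock only while renaming copies over originals."""
    with lock:
        for original, compressed in pairs:
            if original.exists():
                os.replace(compressed, original)
            else:
                # The mail was deleted or renamed while we were compressing.
                compressed.unlink()


# Usage sketch: nullcontext() is a placeholder for a real maildir lock.
# pairs = [(m, compress_to_tmp(m, tmp_dir)) for m in candidates]
# swap_in(pairs, contextlib.nullcontext())
```

Because the rename is cheap compared to the compression, the folder is only locked for a moment no matter how many or how large the mails are.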

Speed 2 – Compression speed – Readback

The overhead while reading back compressed files was an area I definitely wanted to measure. If compressing an email saved 50% disk space but increased read latency by 50%, then would it be worth it? What about 40-60? How much additional slowness for the user would be okay? Would the extra files in cache offset any introduced slowness on a larger sample size?

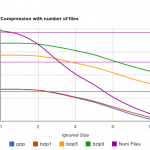

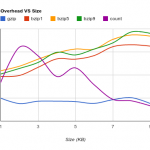

As mentioned above, the tests involved operating on a RamDisk to rule out any disk latency. This means the measurements here are purely for the compression overheads, i.e. CPU usage lag. My hope was to measure a percentage low enough that it could be justified by having more data in cache and thus reduced disk latency. What was found was even more promising.

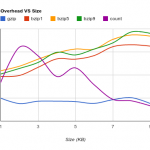

As the graph shows, gzip really doesn’t add much overhead across the sizes until the files get large. Shockingly, however, in certain cases (usually large files) gzip actually operates quicker than simply reading the file, even on the RamDisk. Results like this would easily help the case, but the averages obviously go both ways. Still, the average overhead of 25.8% is easily eaten up by the extra data that fits in cache thanks to the reduced sizes of the emails. In fact, on some tests with larger emails, we were getting responses back via IMAP for compressed mails quicker than for uncompressed mails, even before the cache became useful. Less data to read from the disk meant it returned the data quicker. Definitely something that will need further work.

Overall, since it is usually disk IO that runs out before CPU on a mail server, this really comes down to trading some CPU for reduced disk IO.
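
To make that trade concrete, here is a deliberately simple toy model. It is not derived from the measurements: it just assumes a read costs some disk time plus some in-memory time, that compression shrinks the disk part by the space saving, and that it inflates the in-memory part by the read-back overhead. The 25.8% overhead is the average quoted above and the 38% saving is the overall gzip rate quoted later in this post; the timing values are invented.

```python
def plain_read(disk_s: float, mem_s: float) -> float:
    """Time to read an uncompressed mail: disk time plus in-memory time."""
    return disk_s + mem_s


def compressed_read(disk_s: float, mem_s: float,
                    saving: float, overhead: float) -> float:
    """Less data comes off the disk, but the in-memory part costs more."""
    return disk_s * (1 - saving) + mem_s * (1 + overhead)


def break_even_disk_to_mem(saving: float, overhead: float) -> float:
    """Ratio disk_s / mem_s above which the compressed read is faster."""
    return overhead / saving


SAVING = 0.38     # overall gzip rate quoted in this post
OVERHEAD = 0.258  # average read-back overhead quoted in this post

ratio = break_even_disk_to_mem(SAVING, OVERHEAD)
print(f"compressed wins once disk time exceeds {ratio:.2f}x the in-memory time")

# Invented timings: disk-bound read, 1.0s from disk and 0.1s in memory.
print(plain_read(1.0, 0.1), compressed_read(1.0, 0.1, SAVING, OVERHEAD))
```

Under these assumptions the compressed read wins whenever the disk portion of a read is more than roughly two thirds of the in-memory portion, which is easily the case for anything not already in cache.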

Speed 3 – Backup Speed

Since files change once when they are compressed, the backups take a large enough hit at that point. The same goes for space if you lag compression instead of doing it via the LDA. Ideally you’d be able to differentiate between active POP users (i.e. those that download all mail without leaving a copy on the server) and everyone else. An active POP user probably doesn’t need compression, although the benefit of having less data to read for each mail still comes into play. The real benefit of doing it through the LDA is that your backups will only grab one copy of an email, not the pre- and post-compression ones.

The real improvement for the backups comes with the lower data sizes. Lower data means more ends up in the file system cache. When doing a backup with something like rsync, more folder entries will remain hot, allowing it to scan them much quicker. Never underestimate the importance of the FS cache. On a slower testing box, a difference of 20-30 seconds can be seen between a cold read (i.e. non-cached folder entry) and a hot read of a large (~100k mails) maildir.

Lower individual mail sizes only really play a part if your backups are restricted by your link speed or are in a remote location. They will play a part for rsync’d MDBOX folders since these can be large in size. Compressing the MDBOX is much more efficient than doing differential block syncs with rsync. The only exception is when the MDBOX purge is run. Depending on the users’ delete pattern, the chunk size of the MDBOX, and the latency, the differential option can end up faster for these.

If you are only doing the purge, say, weekly, then try running it on your backup host too, before the sync that follows the purge on your live system. If your users are delete happy, it can help quite a bit.

Some Conclusions

Firstly, sysadmin mailboxes are not typical mailboxes. Upon testing my own mailboxes, I got wildly different results. One had a >80% compression rate, another <10% with a huge overhead. Yes, some of your users will match these profiles (video storage on email, anyone?), but it is unlikely you’ll hit a mailbox with >100k mails of pure, nearly identical text (a nagios archive).

Once I had the script running on a much larger set, the averages started floating around the same point. Things were within 3% of the final average after around 25 mailboxes, which was somewhat surprising.

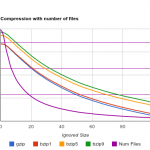

To be honest, with the graphs I was really hoping for an aha graph: one which had a really clear crossover point to point to and go, yup, that is the correct figure for X. But since I didn’t know what X was, it was hard to produce such a graph. I had also forgotten just how much moving the axis affects things, and how much you can adjust things to fit the answers you want to see. Moreover, defining which crossover point you actually want to see also affects the outcome. Do you want to compare the overhead of compression against the data in cache? Well then, how much cache do you have now? Because that matters.

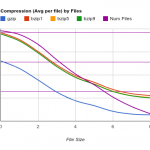

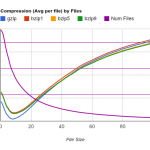

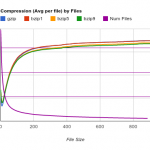

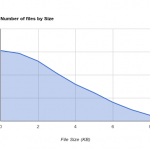

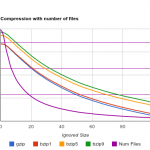

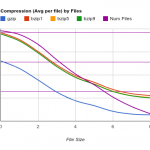

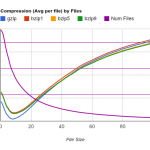

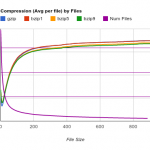

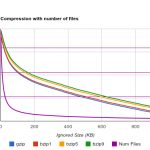

One example of the different cross over points is in the following graphs. They cover compression vs the size of mails. The crossover changes drastically on the small and vsmall graphs.

But then that isn’t an overly useful crossover. At least not looking at it. Originally I wanted to see if there were any points where compression wouldn’t make sense. Combining the information with the overhead graphs above, it is clear that compression makes sense everywhere from a read-back point of view.

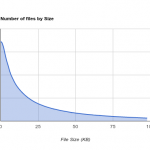

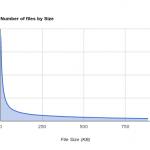

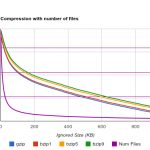

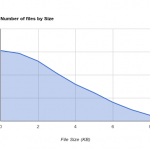

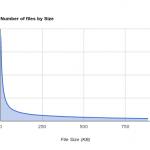

How about if we look at the distribution of file sizes?

There are more small files than large files. This makes a difference if you lag your compression, since you can ignore the smaller files and still get most of the benefits of compression in a shorter time. Just how much?

What is really interesting is just how much space you can save by only compressing large files, and by large I mean > 1 Megabyte large. If you ignore everything smaller than 500KB you still get a 28% compression rate with gzip vs an overall rate of 38%, and that is while skipping well over half the data set. Not bad at all.

But what should you choose to ignore? Well, the difference between doing everything and ignoring everything under, say, 2KB is within the margin of error. Basically it probably isn’t going to do anything to your compression rate but will help with the speed of your compression runs. Where you go really depends on how cold your data will be. If you are going to do it via the LDA then you will automatically hit everything, and frankly I doubt there is a need to exclude things at that stage.
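
If you have per-mail results to hand, the effect of an exclude threshold is easy to estimate. The sketch below assumes a list of (original size, compressed size) pairs in bytes; the function name and the three sample rows are invented purely to show the calculation and are not taken from the data set.

```python
def saving_with_threshold(mails, min_size: int) -> float:
    """Overall space saving if only mails of at least min_size are compressed.

    mails is an iterable of (original_size, compressed_size) in bytes.
    """
    total = 0
    stored = 0
    for original, compressed in mails:
        total += original
        # Mails under the threshold are left as they are.
        stored += compressed if original >= min_size else original
    return 1 - stored / total if total else 0.0


# Invented sample: one tiny mail, one medium mail, one large mail.
sample_mails = [
    (2_000, 1_500),
    (50_000, 30_000),
    (2_000_000, 1_200_000),
]

for threshold in (0, 2 * 1024, 500 * 1024):
    rate = saving_with_threshold(sample_mails, threshold)
    print(f"exclude below {threshold:>7} bytes: {rate:.1%} saved")
```

Running the same calculation over real measurements at a few thresholds shows how much of the saving survives each cut-off before you commit to one.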

My Recommendations

In simple terms, the best I can offer is:

- Turn on compression at the LDA.

- Use gzip with a middle setting (5/6) unless you know your mail is in some way different (think archiving with attachments stripped, but even then the midway works pretty well).

To back-compress your data, start with a high exclude value, let your backups catch up, then lower it, rinse and repeat. It is probably also worth excluding by time until you get caught up and your backups have stabilised. Remember that backup space will balloon out by at least 40-50% as you are doing this; all the compressed mails are effectively new data.